Let’s say you are a machine learning engineer who built a click-through rate (CTR) model to predict whether or not a user will click on a given product listing. You are making this a binary classification problem by stating that there are ultimately two possible outcomes: the user clicks on the product (1) or the user does not click (0).

In order to predict whether or not a user will click on the product, you need to predict the probability that the user will click. For example, if you predict there is an 80% chance the user will click the product, then you are pretty confident the actual (or ground truth) will result in a “1” (user does click on product). However, if the user ends up not clicking on the product, then you were likely too confident and your model may need to be adjusted. Log loss is a good metric if you want to penalize your model for being overly confident.

From your CTR model, you have the following data:

Table 1: Calculating log loss using a trained classifier for a balanced dataset

| User ID | Whether or not the user did click on the product | Our model’s prediction on whether or not a user will click the product |
| --- | --- | --- |
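To make the 80% example concrete, here is a minimal sketch of the per-example log loss for both outcomes. The function name `log_loss_single` and the variable names are hypothetical, chosen only for illustration.

```python
import math

def log_loss_single(y_true: int, p_click: float) -> float:
    """Log loss for one example; p_click is the predicted probability of a click."""
    return -(y_true * math.log(p_click) + (1 - y_true) * math.log(1 - p_click))

# Hypothetical prediction from the example: an 80% chance of a click.
p = 0.8
loss_if_click = log_loss_single(1, p)     # ground truth is 1 (user clicked)
loss_if_no_click = log_loss_single(0, p)  # ground truth is 0 (user did not click)

print(f"clicked:     {loss_if_click:.3f}")
print(f"not clicked: {loss_if_no_click:.3f}")
```

The loss is roughly 0.22 when the user clicks and roughly 1.61 when the user does not, so the confident but wrong prediction is penalized about seven times as heavily.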


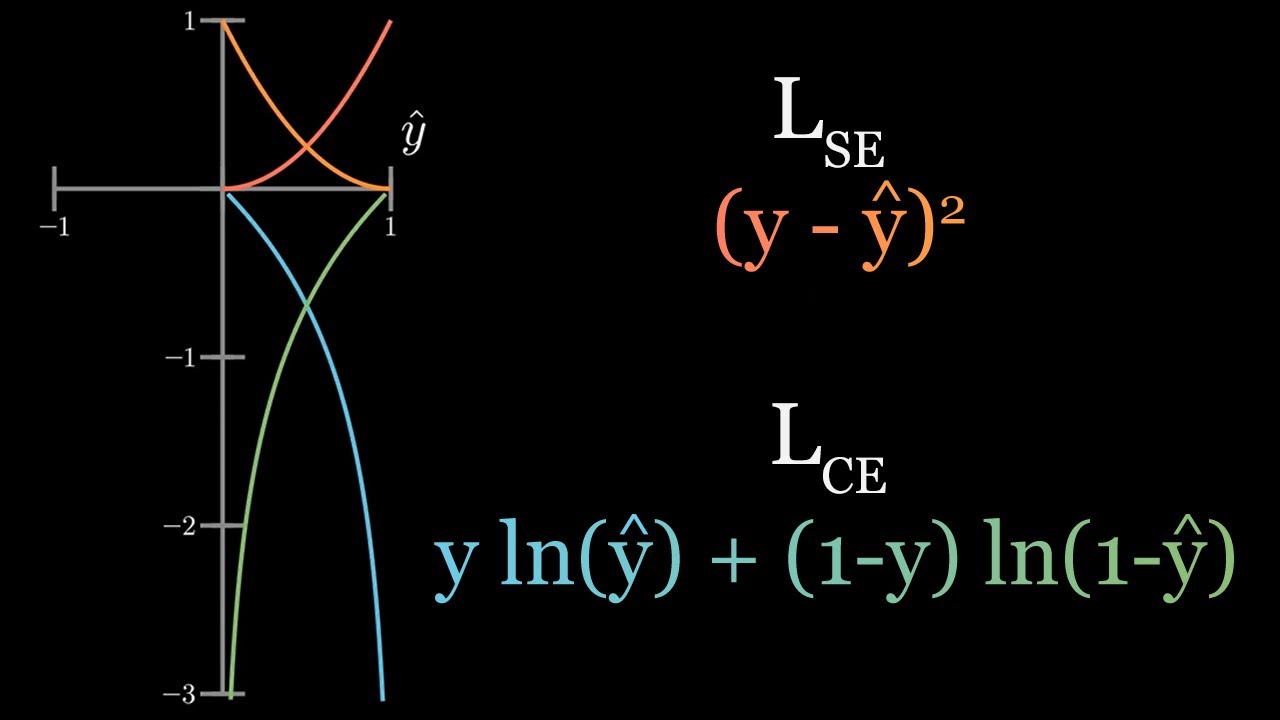

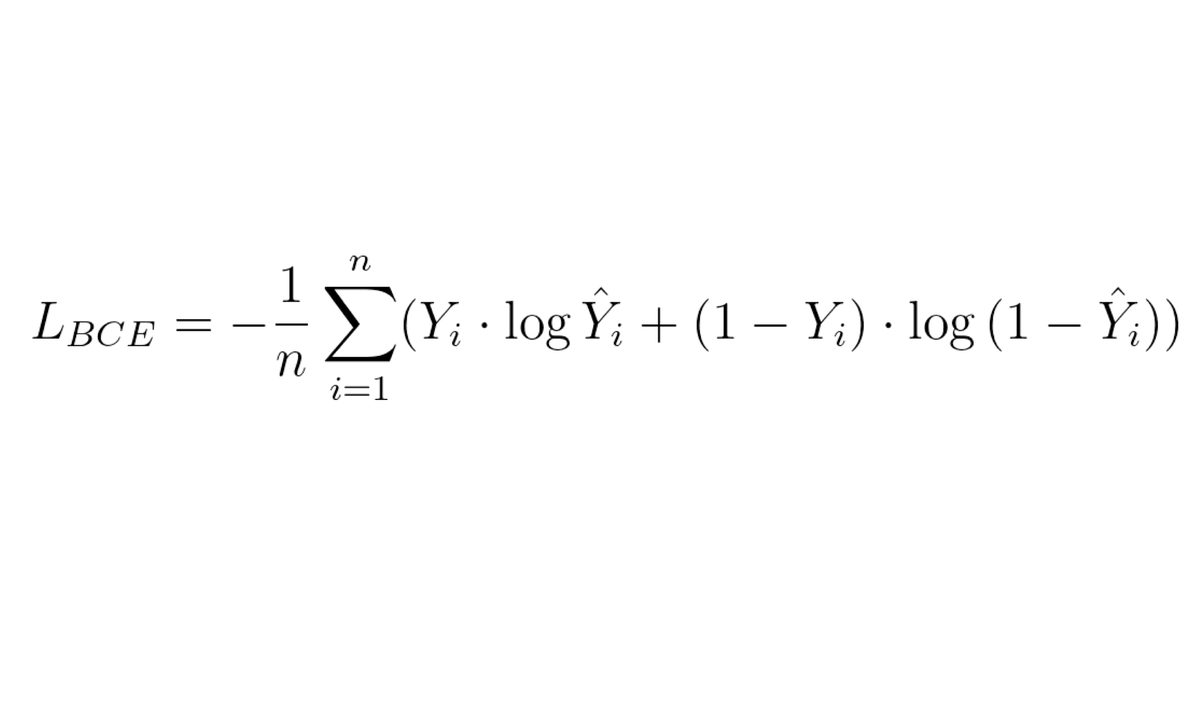

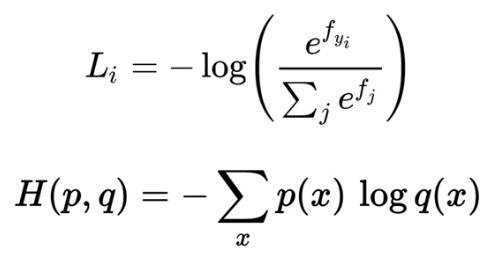
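For reference, binary log loss over a set of predictions is conventionally written as follows. This is the standard textbook form, given on the assumption that it matches the formula the article uses; N (the number of examples) is a symbol introduced here.

$$
\text{Log loss} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log\big(p(y_i)\big) + (1 - y_i) \log\big(1 - p(y_i)\big) \right]
$$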

Here, y_i represents the actual class and p(y_i) is the predicted probability of that class; log(p(y_i)) is the logarithm of that probability.

When Is Log Loss Used In Model Monitoring?

Log loss can be used in training as the logistic regression cost function and in production as a performance metric for binary classification. This piece focuses on how to leverage log loss in a production setting.

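Binary cross entropy is equal to the negative log-likelihood, -1 * log(likelihood), averaged over the examples. Low log loss values generally go together with high accuracy, but the two are not equivalent: log loss also reflects how confident each prediction was, so a model can be accurate and still be penalized for overconfident mistakes.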
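The relationship between binary cross entropy and the likelihood can be checked numerically. The sketch below uses a small hypothetical batch (the labels and probabilities are made up for illustration, not taken from Table 1) and assumes the examples are independent, so the likelihood is the product of the probabilities assigned to the observed outcomes.

```python
import math

# Hypothetical labels (1 = click) and predicted click probabilities.
y_true = [1, 0, 1, 0]
p_click = [0.8, 0.3, 0.6, 0.9]

# Probability the model assigned to the outcome that actually happened.
p_actual = [p if y == 1 else 1 - p for y, p in zip(y_true, p_click)]

# Likelihood of the whole batch, assuming independent examples.
likelihood = math.prod(p_actual)

# Negative log-likelihood, averaged over the batch.
nll = -math.log(likelihood) / len(y_true)

# The same quantity computed as the usual per-example sum.
bce = -sum(
    y * math.log(p) + (1 - y) * math.log(1 - p)
    for y, p in zip(y_true, p_click)
) / len(y_true)

print(f"{nll:.4f} {bce:.4f}")
```

Both values agree up to floating-point error. The last example, a 0.9 prediction for a user who did not click, contributes the largest share of the total, which is the overconfidence penalty at work. For large batches the per-example sum is the safer form, since the raw product of many probabilities can underflow.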


